AutoPlace: Robust Place Recognition with Single-Chip Automotive Radar

(2022 IEEE International Conference on Robotics and Automation)

Kaiwen Cai1, Bing Wang2, Chris Xiaoxuan Lu3

1University of Liverpool, 2University of Oxford, 3University of Edinburgh

Abstract

TL;DR: We propose a place recognition approach for single-chip automotive radar that makes use of Doppler velocity measurements, RCS measurements, and a spatial-temporal neural network.

This paper presents a novel place recognition approach for autonomous vehicles using low-cost, single-chip automotive radar. Aimed at improving recognition robustness and fully exploiting the rich information provided by this emerging automotive radar, our approach follows a principled pipeline that comprises (1) dynamic points removal from instant Doppler measurement, (2) spatial-temporal feature embedding on radar point clouds, and (3) retrieved candidates refinement from Radar Cross Section measurement. Extensive experimental results on the public nuScenes dataset demonstrate that existing visual/LiDAR/spinning radar place recognition approaches are less suitable for single-chip automotive radar. In contrast, our purpose-built approach for automotive radar consistently outperforms a variety of baseline methods across a comprehensive set of metrics, providing insights into its efficacy when used in a realistic system.

Video (3 min)

Methods

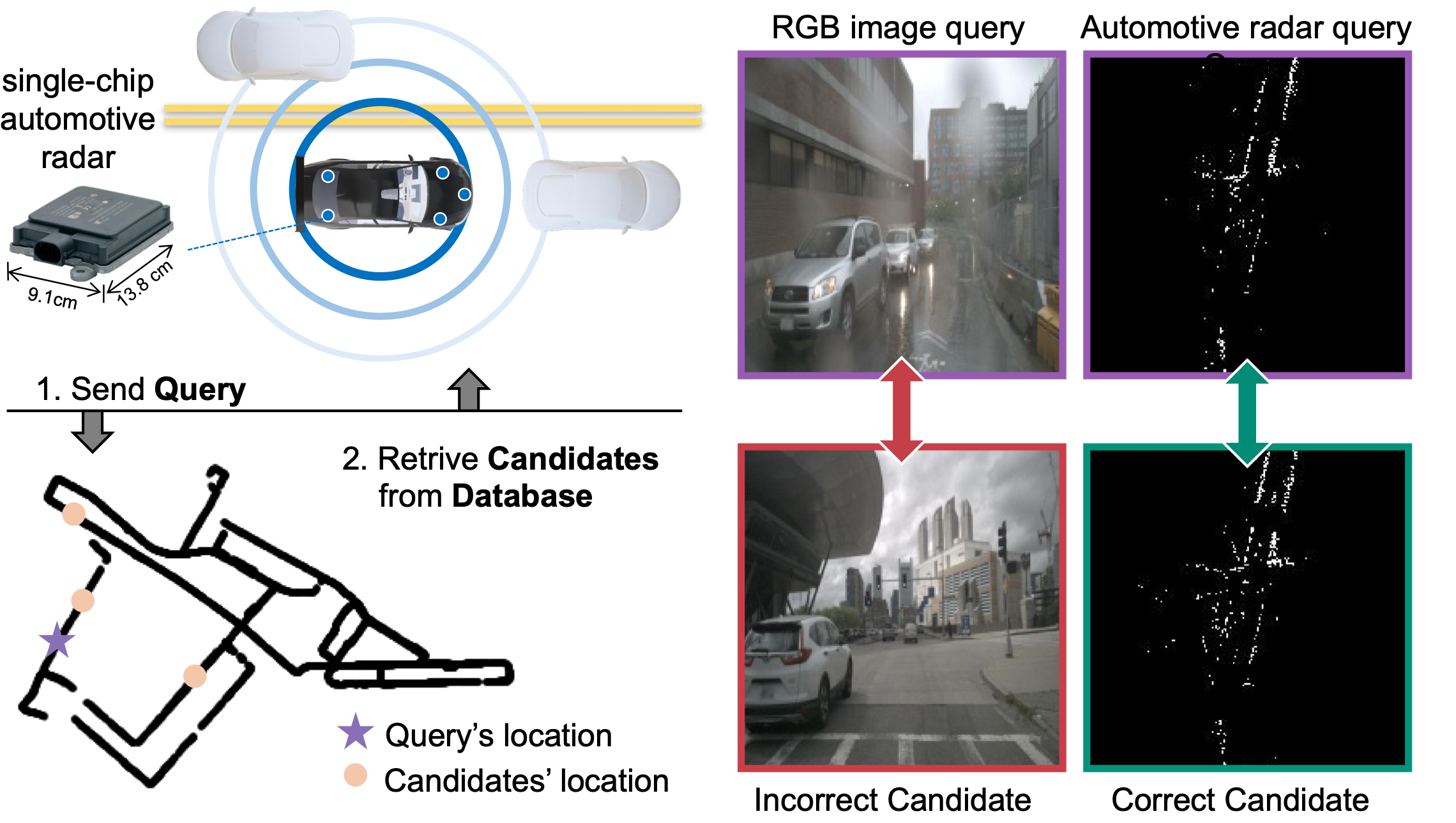

Place recognition using single-chip automotive radar: given a query (marked in purple) acquired from the same place on a rainy day, the state-of-the-art RGB camera-based place recognition method (NetVLAD) fails to retrieve the correct candidate because raindrops block the camera, while the proposed AutoPlace successfully retrieves the correct one.

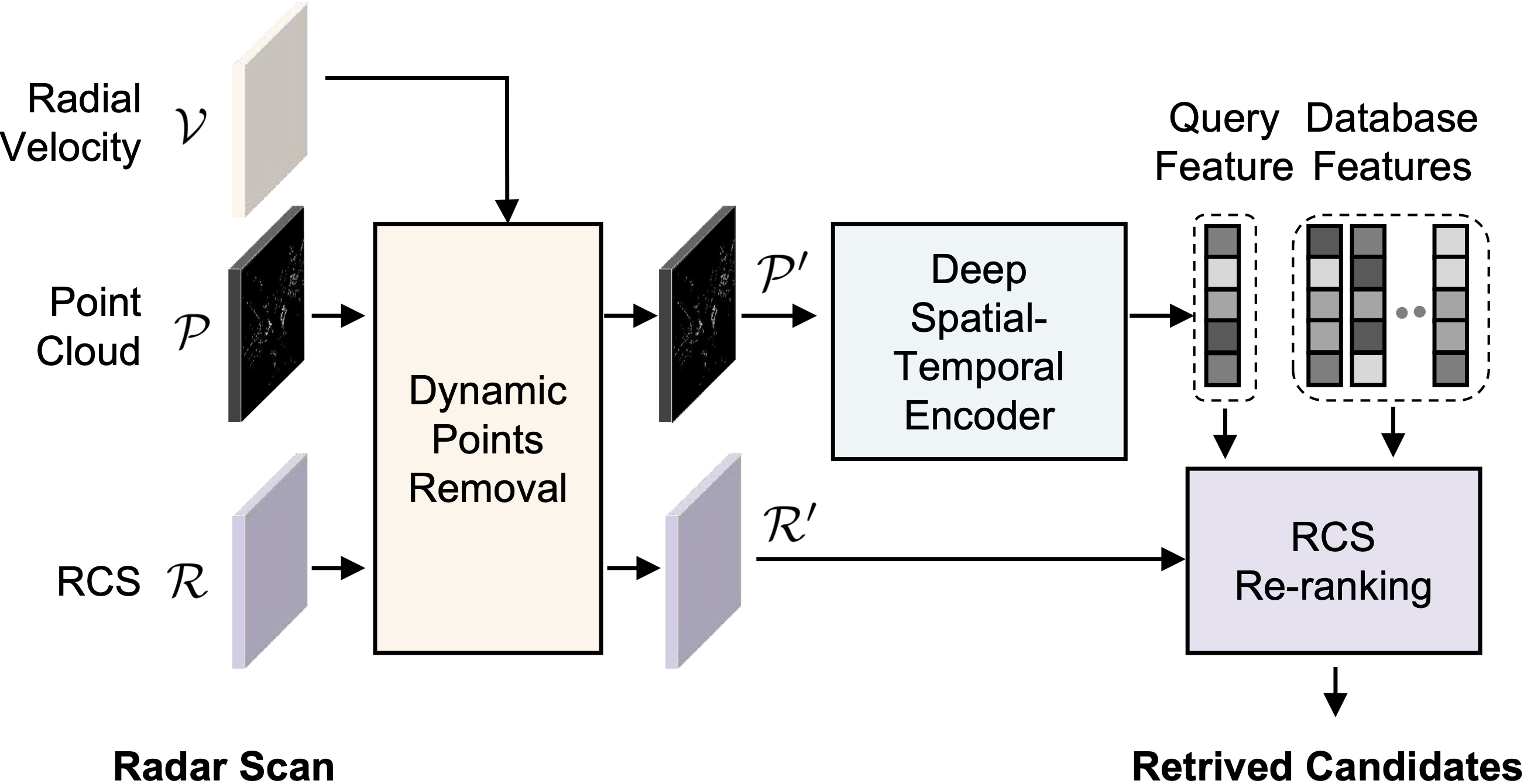

Overview of AutoPlace: radial velocity, point cloud and RCS measurement of a radar scan are utilized by the proposed Dynamic Points Removal (DPR) method, Deep Spatial-Temporal Encoder (SE & TE) and RCS Histogram Re-ranking (RCSHR) method, respectively.

Dynamic Points Removal: dynamic points are removed based on radial velocity. The left figure shows the radial velocity distribution of the points measured by the front radar in a single radar scan; the right shows the front-view image from the front camera and the bird's-eye-view radar point cloud from the front radar. The two dynamic points (the moving cars) are successfully identified by the proposed DPR method and marked in red.
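To make the idea concrete, the following is a minimal sketch of velocity-based filtering, not the authors' implementation. It assumes each point carries a radial velocity that has already been compensated for ego-motion, so static objects read close to zero. The `RadarPoint` type, the `remove_dynamic_points` function, and the 0.5 m/s threshold are all hypothetical names and values chosen for illustration.

```python
from typing import List, NamedTuple


class RadarPoint(NamedTuple):
    """Hypothetical container for one radar detection."""
    x: float                # metres, forward
    y: float                # metres, left
    radial_velocity: float  # m/s, ASSUMED to be ego-motion compensated
    rcs: float              # radar cross section reading


def remove_dynamic_points(points: List[RadarPoint],
                          velocity_threshold: float = 0.5) -> List[RadarPoint]:
    """Keep only points whose absolute radial velocity is at or below the threshold.

    The threshold value is an assumption for this sketch, not a value
    taken from the paper.
    """
    return [p for p in points if abs(p.radial_velocity) <= velocity_threshold]


# Toy scan: two near-static returns and two fast-moving returns (e.g. cars).
scan = [
    RadarPoint(10.0, 1.0, 0.02, 5.0),
    RadarPoint(22.0, -3.0, 6.8, 12.0),
    RadarPoint(35.0, 4.0, -0.1, 3.5),
    RadarPoint(18.0, 2.5, -9.3, 15.0),
]
static_points = remove_dynamic_points(scan)
```

In this toy scan, the two points with large radial velocity are dropped and the two near-static points are kept for the later encoding stage.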

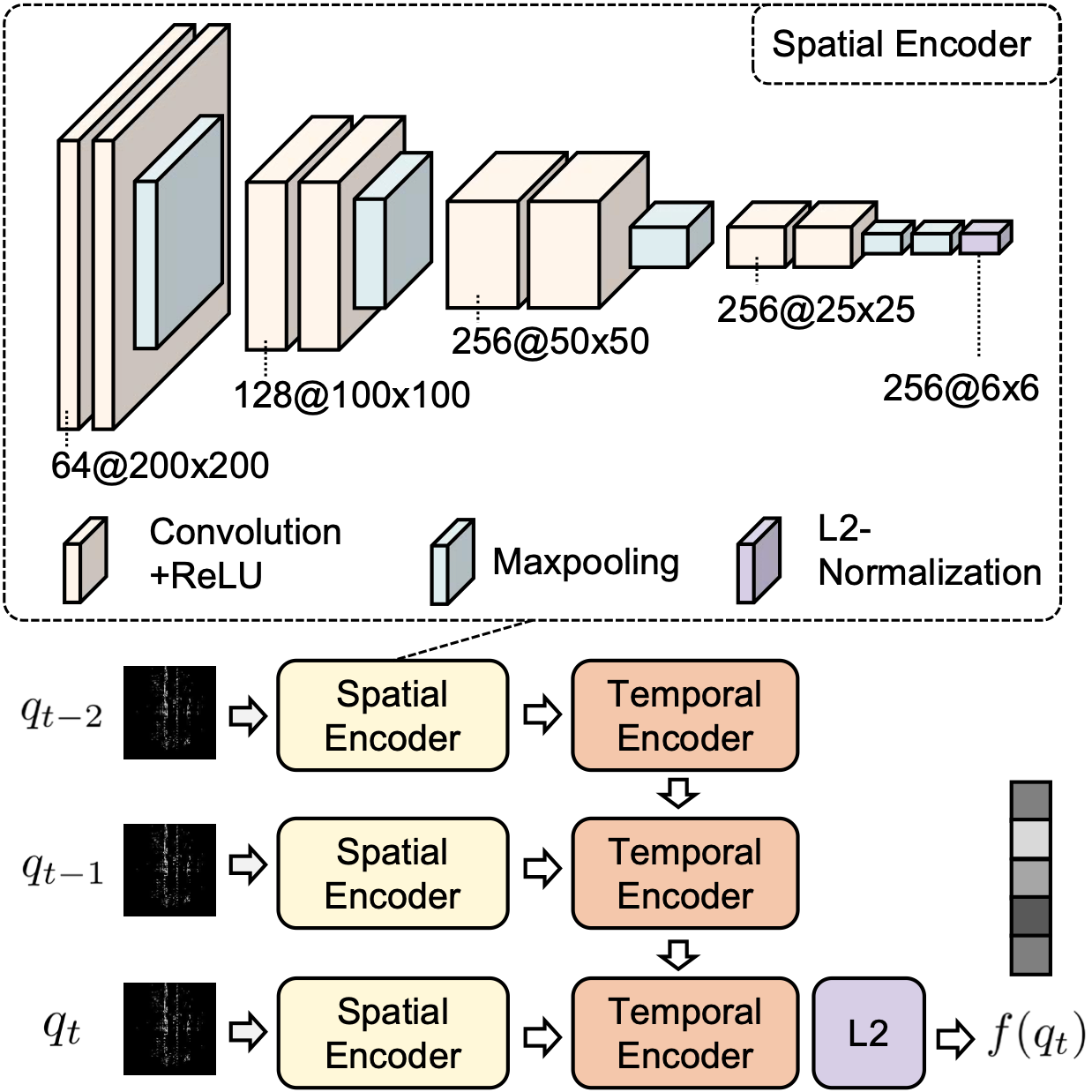

Spatial Encoder & Temporal Encoder: Overview of the proposed deep spatial-temporal encoder (SE & TE): in the Spatial Encoder diagram, the block color reflects layer type while the block size indicates the layer's output size, which is annotated as channel@width×height.

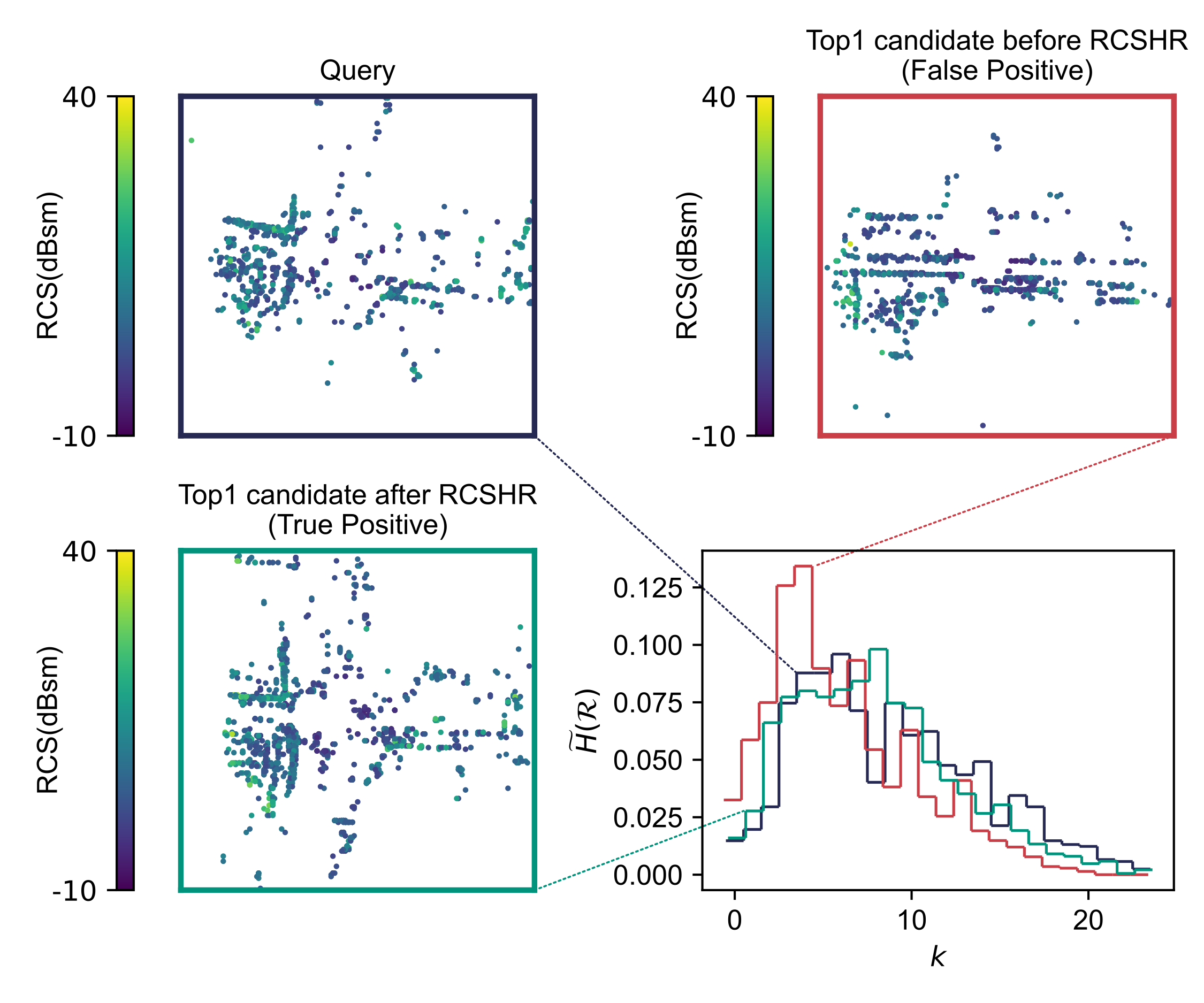

RCS Histogram Re-ranking: The lower right figure shows the RCS histograms of a query and two candidates, while the others are their bird's-eye-view radar images. Given a query, the retrieved top-1 candidate before RCSHR is a false positive; after performing RCSHR based on their RCS histograms, we identify the true positive candidate. (We use a point size larger than 1 pixel for better visualization.)
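The re-ranking step can be illustrated with a short sketch. This is one possible reading under stated assumptions, not the paper's exact procedure: it assumes fixed histogram bin edges, normalized histograms, and an L1 distance between histograms, and it re-orders the retrieved candidates by that distance. The function names, the bin edges, and the choice of L1 distance are all hypothetical.

```python
from typing import List, Sequence, Tuple


def rcs_histogram(rcs_values: Sequence[float],
                  bin_edges: Sequence[float]) -> List[float]:
    """Normalized histogram of RCS values; values outside the edges are ignored."""
    counts = [0] * (len(bin_edges) - 1)
    last = len(counts) - 1
    for v in rcs_values:
        for i in range(len(counts)):
            in_bin = bin_edges[i] <= v < bin_edges[i + 1]
            on_last_edge = i == last and v == bin_edges[-1]
            if in_bin or on_last_edge:
                counts[i] += 1
                break
    total = sum(counts)
    return [c / total for c in counts] if total else [0.0] * len(counts)


def histogram_distance(h1: Sequence[float], h2: Sequence[float]) -> float:
    """L1 distance between two histograms (an assumed choice of metric)."""
    return sum(abs(a - b) for a, b in zip(h1, h2))


def rerank_by_rcs(query_rcs: Sequence[float],
                  candidates: List[Tuple[str, Sequence[float]]],
                  bin_edges: Sequence[float]) -> List[str]:
    """Re-order candidate ids by RCS histogram distance to the query.

    `candidates` is a list of (candidate_id, rcs_values) in the original
    retrieval order; ties keep that order because sorted() is stable.
    """
    q_hist = rcs_histogram(query_rcs, bin_edges)
    ranked = sorted(
        candidates,
        key=lambda c: histogram_distance(q_hist, rcs_histogram(c[1], bin_edges)),
    )
    return [cand_id for cand_id, _ in ranked]


# Toy example: candidate "A" was retrieved first but has a very different
# RCS distribution from the query; candidate "B" matches it closely.
bin_edges = [-10, 0, 10, 20, 30]  # hypothetical bin edges
query_rcs = [2, 5, 8, 12, 15, -3]
candidates = [
    ("A", [22, 25, 28, 18, 21, 5]),
    ("B", [1, 4, 9, 11, 16, -2]),
]
reranked = rerank_by_rcs(query_rcs, candidates, bin_edges)
```

Here the initial top-1 candidate "A" is demoted and "B" moves to the top, mirroring the false-positive-to-true-positive correction described in the caption.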

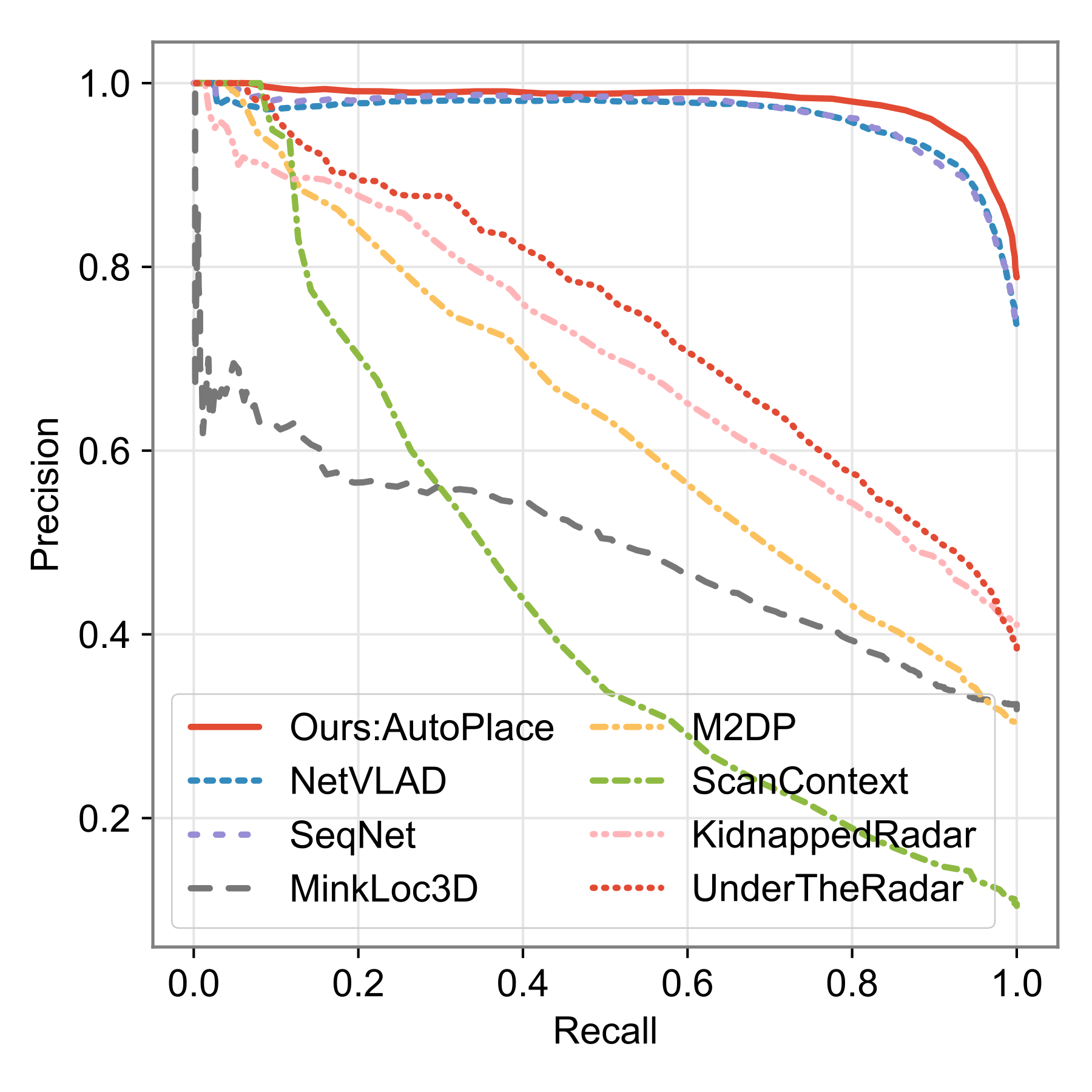

Precision-recall curve of SOTA methods on the nuScenes dataset. AutoPlace achieves the best performance.

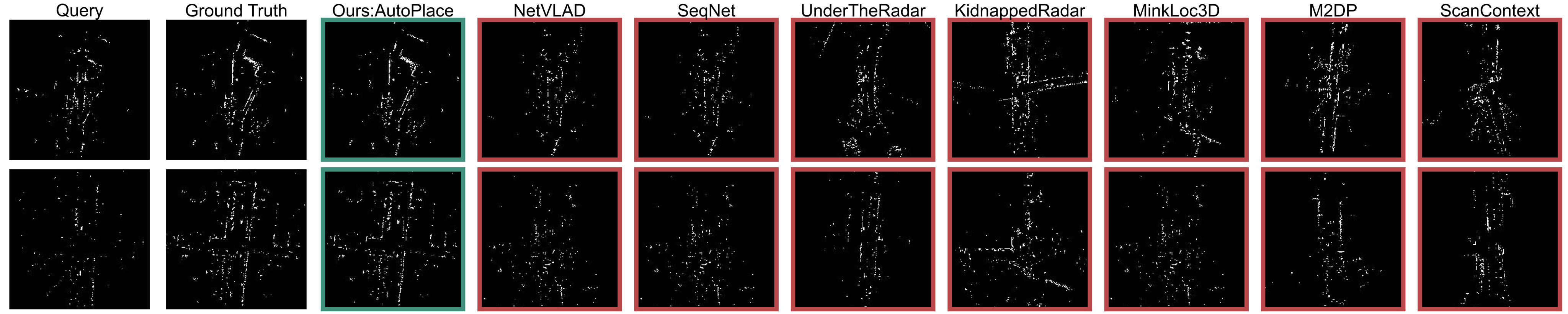

Qualitative analysis of SOTA methods. The first and second columns show the query radar images and ground truth radar images, and the other columns show the top-1 candidate retrieved by each method. Green means the retrieved candidate is a true positive, while red denotes a false positive.
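For readers unfamiliar with how a top-1 retrieval is labelled, the sketch below shows one common convention. It is an illustration only: it assumes each scan is summarized by a fixed-length descriptor, that retrieval is nearest-neighbor search under Euclidean distance, and that a candidate counts as a true positive when its ground-truth position lies within a fixed radius of the query. The function names and the 10 m radius are hypothetical and are not taken from the paper.

```python
import math
from typing import Sequence


def top1_retrieval(query_desc: Sequence[float],
                   database_descs: Sequence[Sequence[float]]) -> int:
    """Index of the database descriptor closest to the query (Euclidean)."""
    return min(range(len(database_descs)),
               key=lambda i: math.dist(query_desc, database_descs[i]))


def is_true_positive(query_pos: Sequence[float],
                     candidate_pos: Sequence[float],
                     radius_m: float = 10.0) -> bool:
    """True if the candidate lies within `radius_m` of the query position.

    The radius is an assumed value for this sketch.
    """
    return math.dist(query_pos, candidate_pos) <= radius_m


# Toy database of three places with 2-D descriptors and map positions.
database_descs = [[0.9, 0.1], [0.2, 0.8], [0.5, 0.5]]
database_pos = [(100.0, 50.0), (3.0, 4.0), (400.0, 10.0)]

query_desc = [0.25, 0.75]
query_pos = (0.0, 0.0)

best = top1_retrieval(query_desc, database_descs)
label = "green" if is_true_positive(query_pos, database_pos[best]) else "red"
```

In this toy case the closest descriptor belongs to the place 5 m from the query, so the retrieval would be shown in green.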

Place recognition performance on the nuScenes dataset.