Self-Adapting Large Visual-Language Models to Edge Devices across Visual Modalities

(The 18th European Conference on Computer Vision ECCV 2024)

Kaiwen Cai¹, Zhekai Duan¹, Gaowen Liu², Charles Fleming², Chris Xiaoxuan Lu³

¹University of Edinburgh, ²Cisco Research, ³University College London

Abstract

TL;DR: We enable large vision-language models such as CLIP to work with non-RGB images on resource-constrained devices.

Recent advancements in Vision-Language (VL) models have sparked interest in their deployment on edge devices, yet challenges in handling diverse visual modalities, manual annotation, and computational constraints remain. We introduce EdgeVL, a novel framework that bridges this gap by integrating dual-modality knowledge distillation and quantization-aware contrastive learning. This approach enables the adaptation of large VL models, like CLIP, for efficient use with both RGB and non-RGB images on resource-limited devices without the need for manual annotations. EdgeVL not only transfers visual-language alignment capabilities to compact models but also maintains feature quality post-quantization, significantly enhancing open-vocabulary classification performance across various visual modalities. Our work represents the first systematic effort to adapt large VL models for edge deployment, showcasing accuracy improvements of up to 15.4% on multiple datasets and a reduction in model size of up to 93-fold.

Methods

The problem of adapting a large vision-language model to edge devices across visual modalities. We use a resource-constrained cleaning robot as an illustrative edge device. The robot has co-located RGB and depth cameras, which generate many paired images without scene labels. Using RGB-depth pairs as inputs and the pre-trained CLIP image encoder as the teacher, EdgeVL transfers knowledge to a small student encoder without labels or human intervention. After this learning process, the student encoder can process images of either modality for open-vocabulary scene classification on the device.

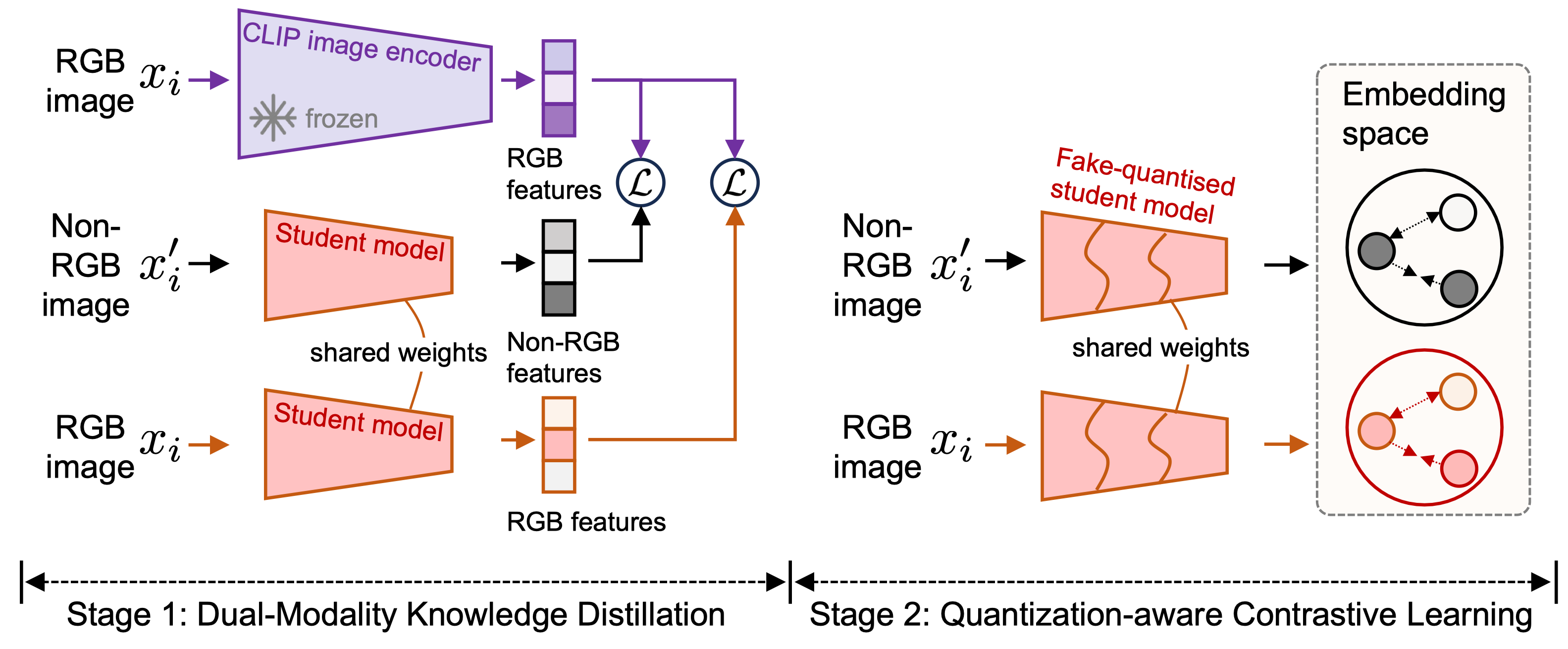
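To make the label-free setup concrete, here is a minimal sketch of a dual-modality distillation loss in plain Python. It is illustrative only and not the authors' implementation: the function names, the L1 feature distance, the L2 normalization, and the choice of the teacher's RGB feature as the shared target for both student outputs are all assumptions made for this example.

```python
import math


def l2_normalize(v):
    """Scale a feature vector to unit length (returned unchanged if all zeros)."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v] if norm > 0 else list(v)


def feature_distance(a, b):
    """Mean absolute difference between two equal-length feature vectors."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def dual_modality_distillation_loss(teacher_rgb_feat, student_rgb_feat, student_depth_feat):
    """Hypothetical loss: pull both student features toward the teacher's RGB feature.

    No scene labels are needed; the teacher feature is the only supervision.
    """
    target = l2_normalize(teacher_rgb_feat)
    loss_rgb = feature_distance(l2_normalize(student_rgb_feat), target)
    loss_depth = feature_distance(l2_normalize(student_depth_feat), target)
    return loss_rgb + loss_depth
```

Because the same target supervises both student outputs, a student trained this way is pushed to map an RGB image and its paired depth image to nearby features, which is what would let it handle either modality at inference time.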

Overall architecture of our proposed method. In stage 1, we distill knowledge from the pre-trained visual encoder into the student model. In stage 2, we first fake-quantize the pre-trained student model and then refine it with contrastive learning.

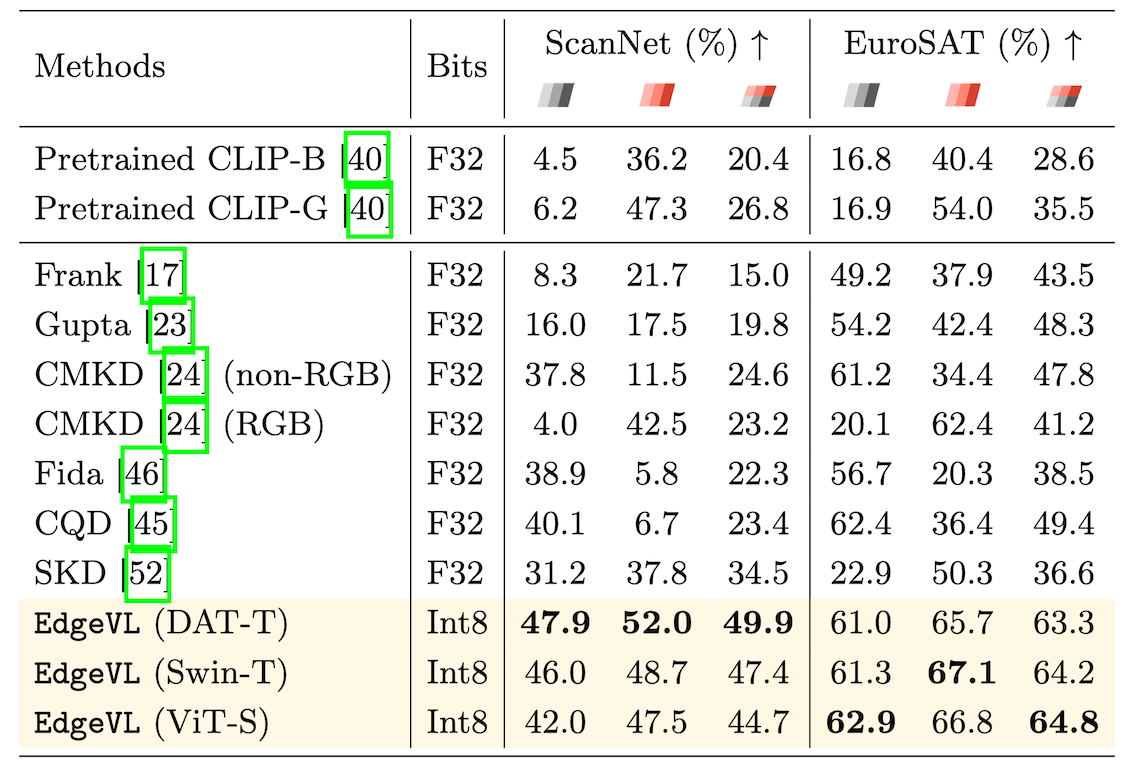
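Fake quantization, as used in stage 2, generally means rounding values onto a low-precision grid and immediately converting them back to floats, so the model sees quantization error while still training in floating point. The sketch below shows one common variant (uniform min-max quantization) in plain Python. The function name, the 8-bit default, and the min-max calibration are assumptions for illustration; the source does not state which scheme EdgeVL uses.

```python
def fake_quantize(values, num_bits=8):
    """Quantize values onto a uniform integer grid, then dequantize back to floats.

    Assumption: the grid spans the min and max of the input (min-max calibration).
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant input needs no grid; return it unchanged.
        return list(values)
    scale = (hi - lo) / (qmax - qmin)
    out = []
    for v in values:
        q = round((v - lo) / scale)      # nearest integer level
        q = max(qmin, min(qmax, q))      # clamp to the representable range
        out.append(q * scale + lo)       # map back to a float
    return out


weights = [-1.0, -0.3, 0.0, 0.42, 1.0]
print(fake_quantize(weights, num_bits=4))
```

Each output differs from its input by at most half a grid step, and lowering `num_bits` makes the grid coarser. In actual quantization-aware training, the rounding step is usually paired with a straight-through gradient estimator, which this sketch omits.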

EdgeVL works with popular backbones such as DAT, Swin Transformer, and ViT. Regardless of the backbone chosen, EdgeVL outperforms other methods in open-vocabulary classification accuracy.

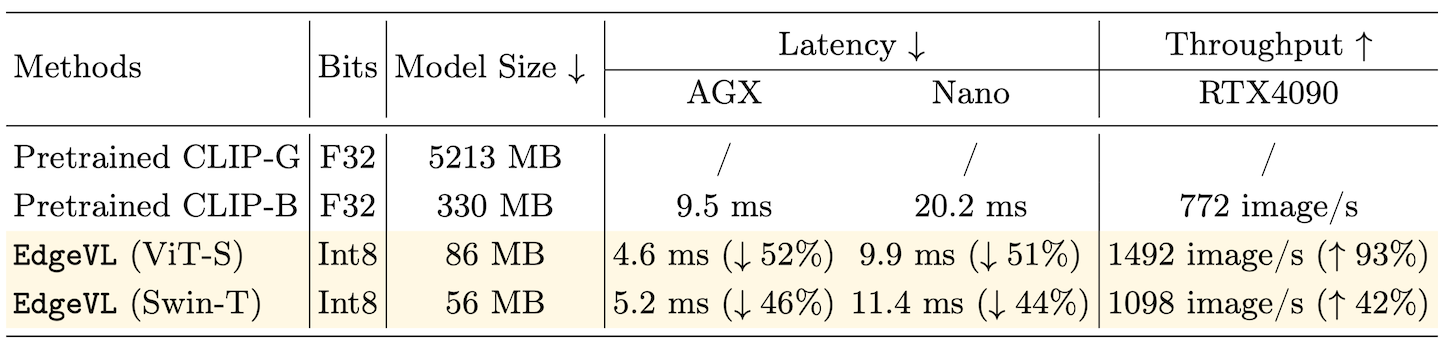
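For readers unfamiliar with open-vocabulary classification in CLIP-style models, the sketch below shows the usual idea: the image feature is compared against text features for arbitrary class names, and the most similar name wins. The function names and the toy three-dimensional embeddings are hypothetical; in practice the features would come from the student image encoder and a CLIP text encoder.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na > 0 and nb > 0 else 0.0


def classify_open_vocabulary(image_feat, text_feats):
    """Return the class name whose text feature is most similar to the image feature.

    text_feats maps each class name to its text embedding; the class list can be
    changed at inference time without retraining the image encoder.
    """
    return max(text_feats, key=lambda name: cosine_similarity(image_feat, text_feats[name]))


# Toy embeddings, invented for illustration only.
text_feats = {"kitchen": [1.0, 0.0, 0.0], "bedroom": [0.0, 1.0, 0.0]}
print(classify_open_vocabulary([0.9, 0.1, 0.0], text_feats))  # kitchen
```

Since only the image encoder is replaced by the compact student, the same comparison applies whether the input feature was computed from an RGB or a non-RGB image.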

EdgeVL cuts the inference latency in half.

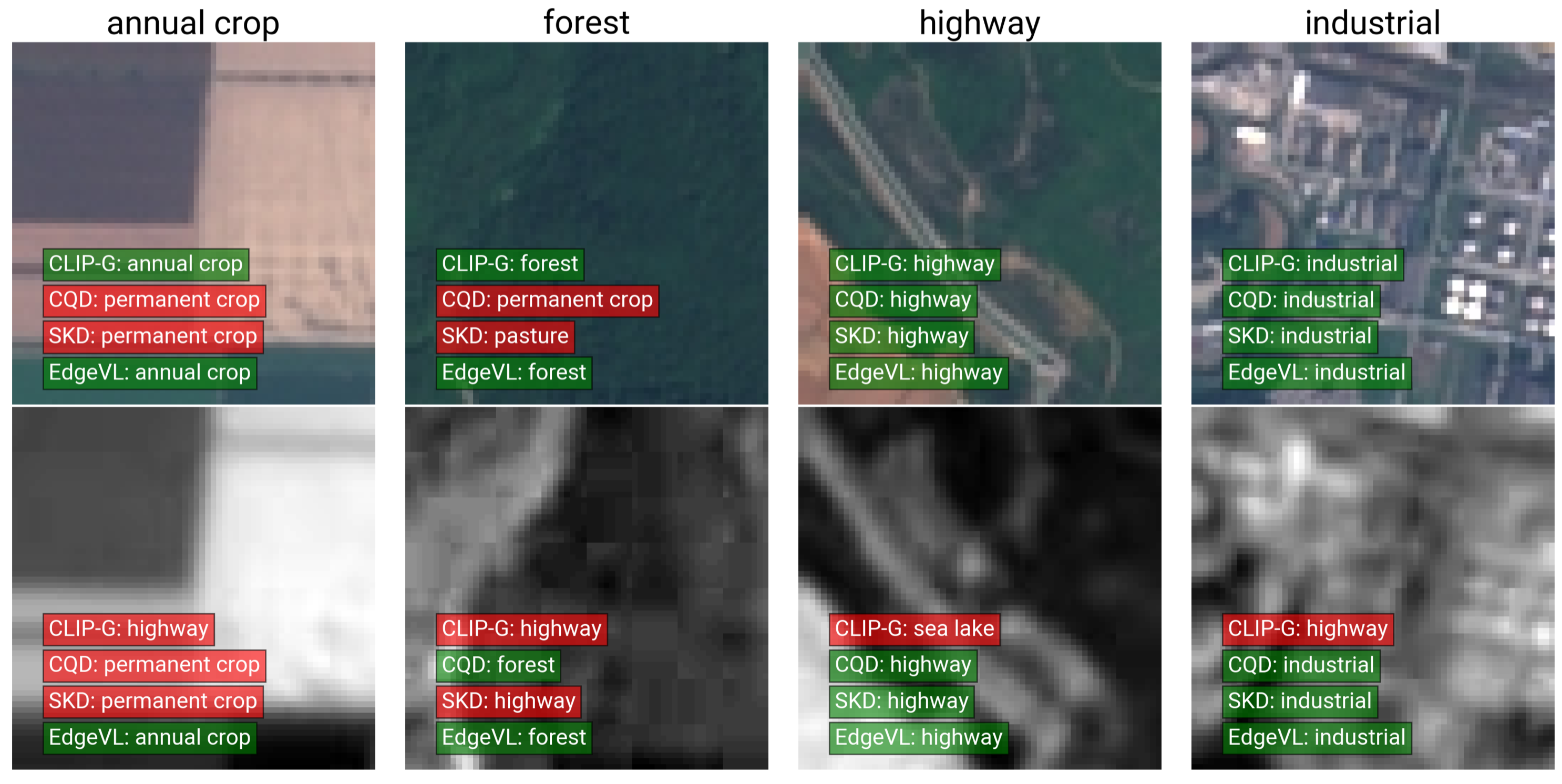

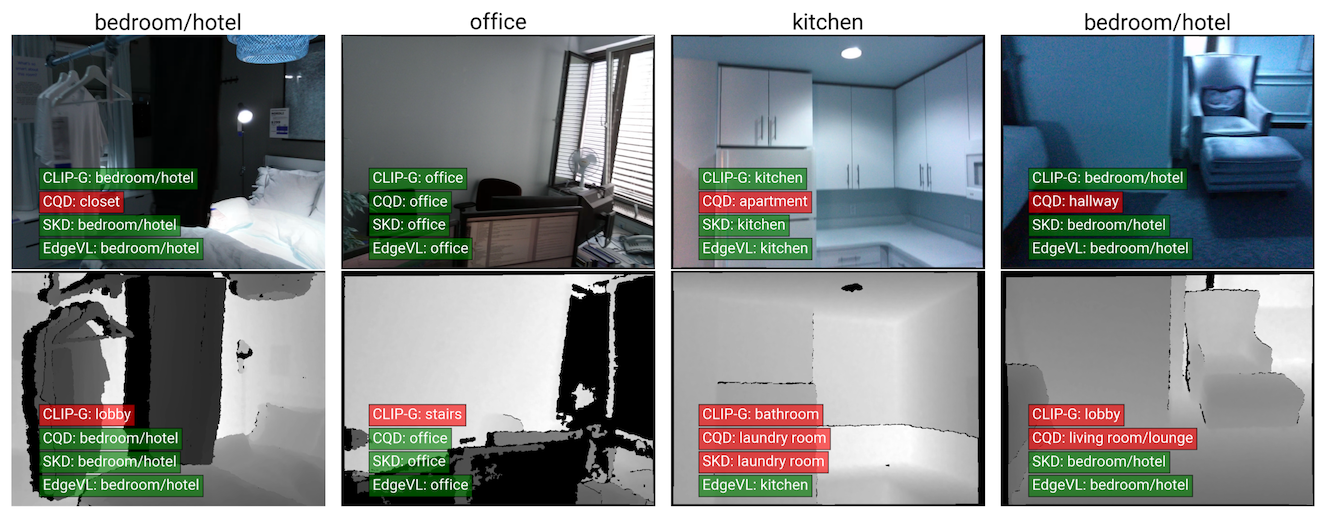

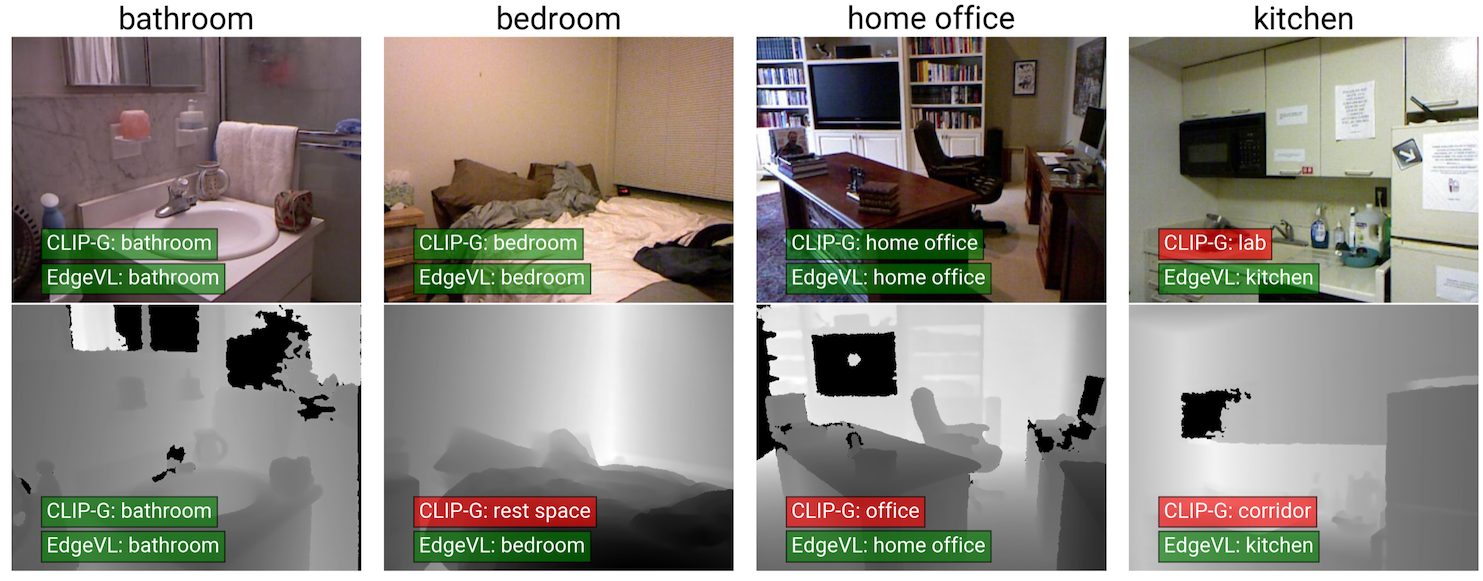

Visual examples of the predictions made by CLIP-G, CQD, SKD, and EdgeVL on the EuroSAT, ScanNet, and SUNRGBD datasets.